What Are AI Agents? A Beginner’s Guide to Agentic AI!

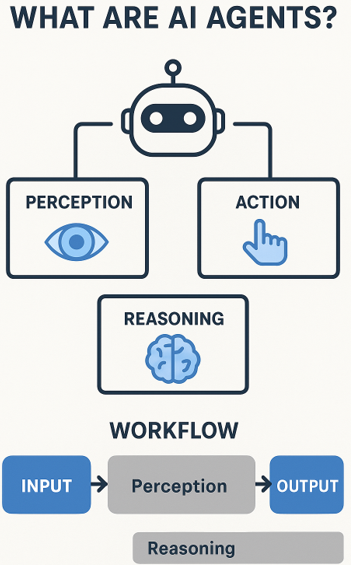

Stuart Russell and Peter Norvig, in their book “Artificial Intelligence: A Modern Approach”, describe an agent as a system that perceives its environment through sensors and acts upon that environment through actuators.

Food for Thought

Did you know that nearly 80% of organizations are already deploying AI agents in some form, and more than 60% expect returns on investment exceeding 100% from these deployments by the end of 2025? https://www.multimodal.dev/

With terms like agentic AI, autonomous agents, multi-agent systems, and AI orchestration gaining heavy traction, this post explains what AI agents actually are and why they are becoming the next big shift in how we build and use intelligent systems.

What is an AI Agent?

At its essence, an AI agent refers to a software entity that:

- Observes its environment (data, context, external signals).

- Reasons about what it has observed.

- Decides on actions.

- Acts to achieve goals, often autonomously or with minimal human supervision.
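
The observe, reason, decide, act cycle above can be sketched as a simple loop. The minimal Python sketch below is purely illustrative: the `Thermostat` environment, the target temperature, and the hand-written decision rule are hypothetical, and in an LLM-based agent the reasoning and decision steps would typically be a model call rather than a fixed rule.

```python
from dataclasses import dataclass, field


@dataclass
class Thermostat:
    """Hypothetical environment: a room whose temperature the agent can change."""
    temperature: float


@dataclass
class ThermostatAgent:
    target: float = 21.0
    log: list[str] = field(default_factory=list)

    def observe(self, env: Thermostat) -> float:
        # Observe: read the current state of the environment.
        return env.temperature

    def reason(self, temperature: float) -> float:
        # Reason: compare the observation with the goal.
        return self.target - temperature

    def decide(self, gap: float) -> str:
        # Decide: choose an action based on the reasoning step.
        if gap > 0.5:
            return "heat"
        if gap < -0.5:
            return "cool"
        return "idle"

    def act(self, env: Thermostat, action: str) -> None:
        # Act: change the environment by one degree per step.
        if action == "heat":
            env.temperature += 1.0
        elif action == "cool":
            env.temperature -= 1.0
        self.log.append(action)

    def run(self, env: Thermostat, max_steps: int = 10) -> None:
        for _ in range(max_steps):
            action = self.decide(self.reason(self.observe(env)))
            if action == "idle":
                break  # goal reached, so stop acting
            self.act(env, action)


room = Thermostat(temperature=18.0)
agent = ThermostatAgent(target=21.0)
agent.run(room)
print(room.temperature, agent.log)  # 21.0 ['heat', 'heat', 'heat']
```

The `max_steps` cap is a small design choice worth keeping even in toy agents: it guarantees the loop terminates if the goal can never be reached.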

Agentic AI is the paradigm in which AI systems aren’t just reactive tools but are proactive, goal-driven, and capable of managing multi-step tasks (possibly adapting over time), rather than only responding to single, isolated prompts.

Key Characteristics of Agentic AI

Agentic AI systems stand apart from traditional AI models because of several defining characteristics:

Autonomy: Agentic AI can operate independently without constant human direction. Once given objectives, agents can initiate tasks, make decisions, and execute actions on their own.

Reactivity: These agents continuously monitor their environment, perceiving changes in real time and responding appropriately, which makes them well suited to dynamic contexts.

Proactivity and Goal-Orientation: Unlike passive systems that simply respond to user inputs, agentic AI can plan ahead, anticipate user needs, and break down complex objectives into smaller, actionable steps.
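
Breaking a complex objective into smaller steps is often modeled as a goal tree whose leaves are directly executable actions. The sketch below assumes a hand-written, hypothetical goal tree (an LLM-based planner would normally generate this structure) and flattens it depth-first into an ordered list of actionable steps.

```python
from dataclasses import dataclass, field


@dataclass
class Goal:
    """A goal is either an executable action (no subgoals) or a parent of subgoals."""
    name: str
    subgoals: list["Goal"] = field(default_factory=list)


def decompose(goal: Goal) -> list[str]:
    """Return the leaf actions of a goal tree in depth-first, left-to-right order."""
    if not goal.subgoals:
        return [goal.name]  # a leaf is already an actionable step
    steps: list[str] = []
    for sub in goal.subgoals:
        steps.extend(decompose(sub))
    return steps


# Hypothetical objective: organizing a team offsite.
offsite = Goal("organize team offsite", [
    Goal("pick a date", [Goal("poll team availability"), Goal("choose best date")]),
    Goal("book venue", [Goal("search venues"), Goal("reserve venue")]),
    Goal("send invitations"),
])

for number, step in enumerate(decompose(offsite), start=1):
    print(number, step)
```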

Persistence and Memory: Agents can retain context across multiple interactions. They can remember past actions, user preferences, and previous outcomes, and use that history to improve over time.

Tool Use and Integration: Modern AI agents can use external tools, APIs, and data sources. For example, they can fetch information from the web, query databases, or trigger workflows in third-party systems.
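
Under the hood, tool use usually means the agent’s reasoning step emits a tool name plus arguments, and the runtime looks up that tool and calls it. The sketch below shows only that dispatch step; the registry, the request format, and both tools (`get_weather`, `lookup_order`) are hypothetical stand-ins for real APIs and databases.

```python
from typing import Callable

# Registry mapping tool names to plain Python functions.
TOOLS: dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Decorator that registers a function as a callable tool."""
    def register(func: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = func
        return func
    return register


@tool("get_weather")
def get_weather(city: str) -> str:
    # Hypothetical stand-in for a real weather API call.
    fake_data = {"Paris": "cloudy", "Cairo": "sunny"}
    return fake_data.get(city, "unknown")


@tool("lookup_order")
def lookup_order(order_id: str) -> str:
    # Hypothetical stand-in for a database query.
    orders = {"A-100": "shipped", "A-101": "processing"}
    return orders.get(order_id, "not found")


def call_tool(request: dict) -> str:
    """Dispatch a request such as {"tool": "get_weather", "args": {"city": "Paris"}}."""
    func = TOOLS.get(request["tool"])
    if func is None:
        return f"error: unknown tool {request['tool']!r}"
    return func(**request.get("args", {}))


# In a real agent, these requests would be produced by the model's reasoning step.
print(call_tool({"tool": "get_weather", "args": {"city": "Paris"}}))  # cloudy
print(call_tool({"tool": "lookup_order", "args": {"order_id": "A-101"}}))  # processing
```

Returning an error string for unknown tools (instead of raising) lets the agent see its mistake as an observation and try again.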

Learning and Adaptation: Through reinforcement learning, feedback loops, or fine-tuning, agents can adjust their strategies, refine their performance, and evolve based on experience.
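
As a small illustration of adapting strategy from feedback, the sketch below uses an epsilon-greedy bandit, one of the simplest reinforcement-learning techniques: the agent keeps a running average reward per strategy, usually exploits the best one so far, and occasionally explores the others. The strategy names and their success rates are hypothetical, simulated numbers.

```python
import random


class EpsilonGreedyAgent:
    """Chooses among strategies and learns their value from reward feedback."""

    def __init__(self, strategies: list[str], epsilon: float = 0.1, seed: int = 0):
        self.epsilon = epsilon
        self.rng = random.Random(seed)
        self.counts = {s: 0 for s in strategies}
        self.values = {s: 0.0 for s in strategies}  # running mean reward per strategy

    def choose(self) -> str:
        if self.rng.random() < self.epsilon:
            return self.rng.choice(list(self.values))  # explore
        return max(self.values, key=self.values.get)  # exploit

    def update(self, strategy: str, reward: float) -> None:
        # Incremental mean: new_value = old_value + (reward - old_value) / n
        self.counts[strategy] += 1
        n = self.counts[strategy]
        self.values[strategy] += (reward - self.values[strategy]) / n


# Hypothetical environment: each strategy succeeds with a fixed probability unknown to the agent.
true_success = {"short_reply": 0.3, "detailed_reply": 0.7, "ask_clarifying_q": 0.5}
env_rng = random.Random(42)

agent = EpsilonGreedyAgent(list(true_success), epsilon=0.1, seed=1)
for _ in range(2000):
    strategy = agent.choose()
    reward = 1.0 if env_rng.random() < true_success[strategy] else 0.0
    agent.update(strategy, reward)

print({s: round(v, 2) for s, v in agent.values.items()})
print("preferred strategy:", max(agent.values, key=agent.values.get))
```

After enough feedback, the estimated values should approach the simulated success rates, and the agent should mostly pick the strategy with the highest one.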

Agent vs Traditional Generative AI / Chatbots: What’s Different?

Traditional generative AI systems (e.g., standalone large language models and standard chatbots) respond to prompts. They are typically stateless (or only weakly stateful), do not plan ahead, and usually handle one task per request.

Agentic systems can orchestrate multiple steps, manage sub-tasks, maintain state/memory, and interact with multiple tools/systems.

Agentic AI also tends to have a more complex architecture, requiring modules for planning, memory, environment modeling, execution monitoring, and safety or alignment constraints.

Examples & Use Cases

To make this more concrete, here are some real and emerging applications:

- Customer service automation — AI agents that don’t just answer questions but proactively identify issues, escalate when necessary, resolve claims, or follow up without human prompting.

- Supply chain & logistics — Agents that monitor shipments, adapt routes or schedules based on real-time traffic/weather, and manage inventories.

- Finance & Fraud Detection — Agents that continuously monitor transactions, flag anomalies, and initiate countermeasures autonomously.

- Vertical agents — Specialized agents for healthcare (medical coding, appointment scheduling), legal (contract review), education (adaptive tutoring), etc. https://research.aimultiple.com/agentic-ai-trends/.
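
To make the fraud-detection case more concrete, here is a minimal monitoring sketch that flags a transaction when its amount deviates sharply from the account’s recent history. It uses a rolling z-score, a simple statistical heuristic chosen for illustration rather than a method any of the cited sources describe; the window size, the 3-standard-deviation threshold, and the `hold_for_review` countermeasure are all hypothetical. Production systems typically combine many more signals and models.

```python
from collections import deque
from statistics import mean, stdev


class TransactionMonitor:
    """Flags amounts far outside an account's recent spending pattern."""

    def __init__(self, window: int = 20, threshold: float = 3.0):
        self.history: deque = deque(maxlen=window)  # most recent approved amounts
        self.threshold = threshold

    def check(self, amount: float) -> str:
        # Only apply the rule once there is enough history for meaningful statistics.
        if len(self.history) >= 5:
            mu, sigma = mean(self.history), stdev(self.history)
            if sigma > 0 and abs(amount - mu) / sigma > self.threshold:
                # Hypothetical countermeasure; flagged amounts are kept out of the history.
                return "hold_for_review"
        self.history.append(amount)
        return "approve"


monitor = TransactionMonitor()
for amount in [42.0, 38.5, 45.0, 40.0, 41.2, 39.9, 2500.0, 43.1]:
    print(amount, monitor.check(amount))
```

Keeping flagged amounts out of the history is a deliberate choice: otherwise a single large fraudulent charge would inflate the baseline and make the next one look less unusual.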

Technical Underpinnings: How They Work

Environment & Sensor Modules: These gather inputs, which can come from APIs, user inputs, sensors, or knowledge databases.

Reasoning / Planning Layer: This layer often combines large language models with symbolic reasoning or hierarchical planning, and decides which sub-goals or tasks are needed.
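
One common way for a planning layer to represent sub-tasks is as a dependency graph, executed in an order that respects those dependencies. The sketch below uses Python’s standard-library `graphlib.TopologicalSorter` (Python 3.9+); the travel-booking tasks and their dependencies are hypothetical, and in an LLM-based planner the graph itself would usually be produced by the model.

```python
from graphlib import TopologicalSorter

# Hypothetical plan: each sub-task maps to the set of sub-tasks it depends on.
plan: dict[str, set[str]] = {
    "collect_requirements": set(),
    "search_flights": {"collect_requirements"},
    "search_hotels": {"collect_requirements"},
    "compare_options": {"search_flights", "search_hotels"},
    "book_trip": {"compare_options"},
    "send_itinerary": {"book_trip"},
}

# One valid sequential execution order (there may be several).
order = list(TopologicalSorter(plan).static_order())
print(order)

# Group sub-tasks into batches whose dependencies are all satisfied,
# i.e. tasks within a batch could run in parallel.
sorter = TopologicalSorter(plan)
sorter.prepare()
batches: list[list[str]] = []
while sorter.is_active():
    ready = sorted(sorter.get_ready())
    batches.append(ready)
    sorter.done(*ready)  # mark the batch as finished so dependents become ready

for batch in batches:
    print(batch)
```

The batch view is useful for agents that can call several tools concurrently, such as searching flights and hotels at the same time.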

Tool / Action Modules: These let agents interface with external tools such as APIs, scripts, databases, and UI automation.

Memory / Context Manager: This stores history, context, and state across interactions. Memory can be short-term (session-based) or long-term (persisting over days or weeks).
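
The short-term versus long-term split can be sketched with two stores: a bounded buffer of recent conversation turns that lives only for the session, and a small key-value store persisted to disk. The `AgentMemory` class, the JSON file, and the window size are hypothetical; real systems often use a database or a vector store for the long-term part.

```python
import json
import tempfile
from collections import deque
from pathlib import Path


class AgentMemory:
    """Hypothetical two-tier memory: a session buffer plus a JSON-backed fact store."""

    def __init__(self, path: Path, window: int = 4):
        # Short-term: only the most recent turns; lost when this object goes away.
        self.short_term: deque = deque(maxlen=window)
        # Long-term: facts such as user preferences, reloaded in every new session.
        self.path = path
        self.long_term: dict = json.loads(path.read_text()) if path.exists() else {}

    def add_turn(self, text: str) -> None:
        self.short_term.append(text)

    def remember(self, key: str, value: str) -> None:
        self.long_term[key] = value
        self.path.write_text(json.dumps(self.long_term))  # persist immediately

    def context(self) -> str:
        """Build the context an agent might prepend to its next prompt."""
        facts = "; ".join(f"{k}={v}" for k, v in self.long_term.items())
        return f"Known facts: {facts}\nRecent turns: {list(self.short_term)}"


store = Path(tempfile.mkdtemp()) / "agent_memory.json"

session1 = AgentMemory(store, window=2)
session1.remember("preferred_language", "French")
for turn in ["hi", "book a table", "for two people"]:
    session1.add_turn(turn)  # the oldest turn falls out of the 2-turn window
print(session1.context())

# A new session reloads long-term facts but starts with an empty short-term buffer.
session2 = AgentMemory(store, window=2)
print(session2.long_term, list(session2.short_term))
```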

Monitoring, Feedback & Learning: This tracks whether actions succeeded, gathers feedback, and adjusts strategy accordingly, which may involve reinforcement learning, evaluation, and human-in-the-loop correction.
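
Execution monitoring can be as simple as checking each action’s result, retrying a bounded number of times, and escalating to a human when the agent keeps failing. In the sketch below, `make_flaky_action` builds a hypothetical action that fails a set number of times before succeeding, and “escalation” only records a message; both stand in for real integrations.

```python
from typing import Callable, Optional


def run_monitored(action: Callable[[], str], max_attempts: int, events: list[str]) -> Optional[str]:
    """Run an action, log each outcome, retry on failure, and escalate if all attempts fail."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = action()
        except Exception as exc:  # treat any error as a failed attempt
            events.append(f"attempt {attempt} failed: {exc}")
            continue
        events.append(f"attempt {attempt} succeeded")
        return result
    events.append("escalated to human reviewer")  # human-in-the-loop fallback
    return None


def make_flaky_action(failures: int) -> Callable[[], str]:
    """Hypothetical action that raises `failures` times, then succeeds."""
    state = {"calls": 0}

    def action() -> str:
        state["calls"] += 1
        if state["calls"] <= failures:
            raise RuntimeError("API timeout")
        return "ticket updated"

    return action


log: list[str] = []
result = run_monitored(make_flaky_action(2), max_attempts=3, events=log)
print(result, log)

log2: list[str] = []
result2 = run_monitored(make_flaky_action(5), max_attempts=3, events=log2)
print(result2, log2)
```

The event log doubles as feedback data: counting failures per action over time shows which integrations need attention.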

Safety, Governance & Control: This manages risks through permissions, alignment with objectives, prevention of unwanted behaviors, and audit trails.
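
A basic governance layer can sit between the agent and its tools: every requested action is checked against an allowlist and written to an audit trail, whether it is allowed or not. The `Guardrail` class, the action names, and the in-memory log below are hypothetical; a real deployment would persist the audit log and enforce permissions outside the model’s control.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class Guardrail:
    allowed_actions: set[str]
    audit_log: list[dict] = field(default_factory=list)

    def authorize(self, agent_id: str, action: str, target: str) -> bool:
        allowed = action in self.allowed_actions
        # Record every request, including denied ones, for later auditing.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "action": action,
            "target": target,
            "allowed": allowed,
        })
        return allowed


# Hypothetical policy: a support agent may read tickets and reply, but never issue refunds.
guard = Guardrail(allowed_actions={"read_ticket", "send_reply"})
print(guard.authorize("support-bot", "read_ticket", "ticket-17"))  # True
print(guard.authorize("support-bot", "issue_refund", "order-99"))  # False
print([(entry["action"], entry["allowed"]) for entry in guard.audit_log])
```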

Challenges & Considerations

Agentic AI is powerful but also comes with non-trivial challenges:

- Scalability of context / memory: Maintaining large, long-term context without consuming too much compute or storage.

- Alignment & Control: Ensuring the agent’s goals align with human values and business objectives; avoiding unintended behavior.

- Security & Privacy: Agents often require access to sensitive data or systems, which raises the risk of misuse, data leaks, or adversarial attacks.

- Explainability & Transparency: It’s harder to interpret why an agent took a particular sequence of actions, especially when planning and tool use are involved.

- Cost vs Benefit: Many pilot or proof-of-concept (PoC) agentic AI projects fail to deliver sufficient ROI when they are not designed well.

Future Outlook

What to expect as agentic AI continues to evolve:

- More open-source frameworks to build agents and orchestrate multi-agent systems.

- Increased use of agents in consumer devices and embedded systems.

- Integration of agents with physical robotics or IoT for real-world interactions.

- Stricter standards & regulations around the safety, identity, and auditing of agents.

- Growth of agentic programming: tools and environments that allow human developers to specify goals/tasks, and the agent handles decomposition & execution.

Getting Started: How You Can Use or Build AI Agents

If you’re intrigued and want to experiment, here are steps you can take:

Pick a clear task / domain: Start with something bounded, e.g., lodging customer support queries, scheduling, or document summarization.

Define objectives & constraints: What is a “good outcome”? Define metrics for it, and also define safety boundaries (“this agent should never…” rules).
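
Objectives and constraints become much easier to act on once they are written down as checks. The sketch below assumes a hypothetical support agent evaluated against a small test set: the metric is the fraction of cases whose reply contains an expected phrase, and each “never” rule is a forbidden phrase. The test cases, the rules, and `fake_agent` are illustrative placeholders, and substring matching is a deliberately crude stand-in for real evaluation methods.

```python
from typing import Callable

# Hypothetical "this agent should never..." rules, expressed as forbidden phrases.
NEVER_SAY = ["your password is", "guaranteed refund"]

# Hypothetical test cases: (user query, phrase a good answer must contain).
TEST_CASES = [
    ("How do I reset my password?", "reset link"),
    ("Where is my order?", "tracking"),
    ("Can I get a refund?", "refund policy"),
]


def evaluate(agent: Callable[[str], str]) -> dict:
    """Score an agent on the test cases and collect any rule violations."""
    passed, violations = 0, []
    for query, expected in TEST_CASES:
        reply = agent(query).lower()
        if expected in reply:
            passed += 1
        violations += [rule for rule in NEVER_SAY if rule in reply]
    return {"success_rate": passed / len(TEST_CASES), "violations": violations}


def fake_agent(query: str) -> str:
    """Stand-in for a real agent, used only to exercise the evaluation."""
    canned = {
        "How do I reset my password?": "I've sent you a reset link.",
        "Where is my order?": "Here is your tracking number: 123.",
        "Can I get a refund?": "You have a guaranteed refund!",
    }
    return canned[query]


report = evaluate(fake_agent)
print(report)
```

Here the third reply both misses the expected answer and breaks a “never” rule, so the report shows a success rate of two out of three along with one violation.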

Select or build tools: Use an LLM that supports tool integration, or a framework that lets you plug in tools and APIs.

Design memory & context flow: Store user preferences and past interactions, and decide what needs to persist versus what can be ephemeral.

Iterate & monitor: Run a pilot, collect failures, and refine, using user feedback, logs, and evaluation metrics.

Ensure governance & security: Put access controls, audit logs, testing, and fallback procedures in place.

Example: Simple AI Agent with LangChain

Here’s a basic Python example that shows how to create an AI agent that can search the web and answer user questions.

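```python
# Install dependencies (assumes a pre-1.0 LangChain release; the SerpAPI
# wrapper needs the google-search-results package):
# pip install langchain langchain-openai langchain-community google-search-results python-dotenv

from dotenv import load_dotenv
from langchain.agents import AgentType, Tool, initialize_agent
from langchain_community.utilities import SerpAPIWrapper
from langchain_openai import OpenAI

# Load API keys from a .env file instead of hard-coding them. The file should contain:
# OPENAI_API_KEY=your_openai_api_key
# SERPAPI_API_KEY=your_serpapi_api_key
load_dotenv()

# Initialize the language model (temperature=0 for more deterministic output)
llm = OpenAI(temperature=0)

# Set up a web-search wrapper; it reads SERPAPI_API_KEY from the environment
search = SerpAPIWrapper()

# Define the tools the agent can call; the description tells the LLM when to use each one
tools = [
    Tool(
        name="Search",
        func=search.run,
        description="This is for searching the web to get up-to-date information",
    )
]

# Create a ReAct-style agent that picks tools based on their descriptions.
# Note: initialize_agent is a legacy API that is deprecated in recent LangChain releases.
agent = initialize_agent(
    tools,
    llm,
    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
    verbose=True,
)

# Run the agent and print its final answer
query = "What's the latest trend in agentic AI?"
result = agent.invoke({"input": query})
print(result["output"])
```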

How It Works:

- LLM: Handles reasoning and language output.

- Tools: Provide external capabilities (like web search, APIs).

- Agent: Uses both to plan actions, call tools, and give answers.
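
The `zero-shot-react-description` agent type used in the example follows the ReAct pattern: the model alternates between a Thought, an Action with an Action Input, and an Observation returned by the tool, until it produces a Final Answer. The framework-free sketch below imitates that loop with a scripted stand-in for the LLM (`scripted_llm`) and a fake search tool; both are hypothetical, this is not LangChain’s implementation, and the exact prompt format LangChain uses may differ.

```python
import re
from typing import Callable


def fake_search(query: str) -> str:
    """Hypothetical tool standing in for a real web search."""
    return f"(placeholder search result for {query!r})"


TOOLS: dict[str, Callable[[str], str]] = {"Search": fake_search}


def scripted_llm(transcript: str) -> str:
    """Stand-in for an LLM: emits a tool call first, then a final answer."""
    if "Observation:" not in transcript:
        return ("Thought: I should search for this.\n"
                "Action: Search\n"
                "Action Input: latest trend in agentic AI")
    observation = transcript.rsplit("Observation:", 1)[1].strip()
    return f"Thought: I now know the final answer.\nFinal Answer: {observation}"


def react_loop(question: str, llm: Callable[[str], str], max_steps: int = 5) -> str:
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = llm(transcript)
        transcript += "\n" + step
        final = re.search(r"Final Answer:\s*(.*)", step)
        if final:
            return final.group(1).strip()
        action = re.search(r"Action:\s*(.*)", step)
        action_input = re.search(r"Action Input:\s*(.*)", step)
        if not (action and action_input):
            raise ValueError(f"could not parse model output: {step!r}")
        # Call the chosen tool and feed its result back to the model as an Observation.
        observation = TOOLS[action.group(1).strip()](action_input.group(1).strip())
        transcript += f"\nObservation: {observation}"
    return "stopped: step limit reached"


answer = react_loop("What's the latest trend in agentic AI?", scripted_llm)
print(answer)
```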

This is a single-agent setup. For multi-agent systems, you can connect multiple such agents with different tools or goals.
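
A very small multi-agent setup can be sketched as a coordinator that routes each request to a specialist agent. In the sketch below, the specialists are plain functions and routing is by keyword, both hypothetical simplifications; in practice each specialist could be an LLM agent with its own tools, and the router is often an LLM as well.

```python
from typing import Callable

Agent = Callable[[str], str]


def billing_agent(request: str) -> str:
    # Hypothetical specialist: would call billing APIs in a real system.
    return f"[billing] handling: {request}"


def tech_support_agent(request: str) -> str:
    # Hypothetical specialist: would search docs or run diagnostics.
    return f"[tech] handling: {request}"


def general_agent(request: str) -> str:
    # Fallback agent for anything the specialists don't cover.
    return f"[general] handling: {request}"


# Keyword routing table: the first matching entry wins.
ROUTES: list[tuple[tuple[str, ...], Agent]] = [
    (("invoice", "refund", "charge"), billing_agent),
    (("error", "crash", "login"), tech_support_agent),
]


def coordinator(request: str) -> str:
    """Send the request to the first specialist whose keywords match."""
    text = request.lower()
    for keywords, agent in ROUTES:
        if any(word in text for word in keywords):
            return agent(request)
    return general_agent(request)


for req in ["I was charged twice", "App shows an error on login", "What are your hours?"]:
    print(coordinator(req))
```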

Final Words

AI agents represent a major evolution in how artificial intelligence can act, moving from passive prompt-response systems to proactive, autonomous systems that can plan, execute, and adapt over time. As more organizations adopt agentic AI, understanding both its technical demands and its risks is key to building systems that are useful, safe, and valuable.

A question worth asking: could your next productivity app or tool embed an AI agent under the hood? Increasingly, the answer looks like yes.