What Are the Latest Tech Trends Dominating the IoT Landscape?

Significant Technology Trends in IoT and their Advantages

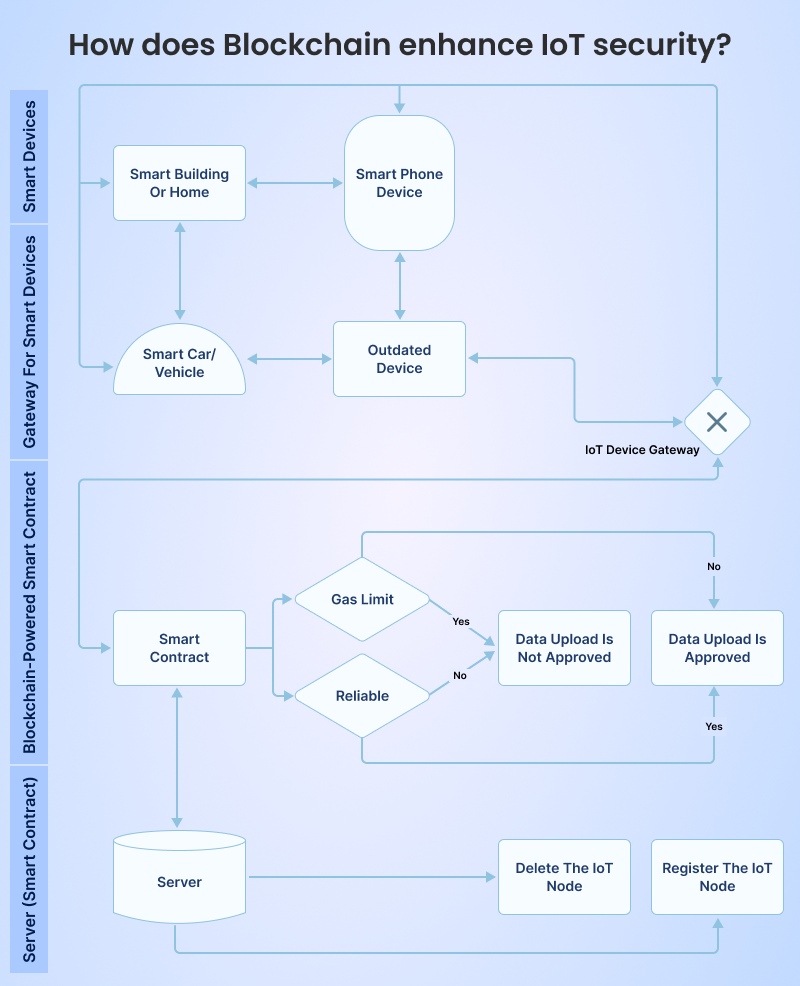

Blockchain Integration

Adoption of 5G Network

Edge Computing Integration

- Processing data locally means faster response times, which is crucial for applications that require real-time or near-real-time actions, such as autonomous vehicles or industrial automation.

- Transmitting only relevant or summarized data to the cloud helps optimize bandwidth usage. This is especially important in scenarios where bandwidth is limited or costly.

- Keeping sensitive data close to its source improves privacy and security, because less of it has to be transmitted over the network. Edge devices can also apply security measures locally, and less data is exposed in transit than in a fully centralized cloud architecture.

- Edge computing scales better because the processing load is distributed across edge devices, whereas a centralized cloud model can run into bottlenecks as the number of connected devices grows.
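To make the bandwidth and latency points concrete, here is a minimal Python sketch of what an edge device might do with a window of sensor readings: act on out-of-range values locally and keep only a compact summary for upload. The `Summary` type, the `summarize_window` function, and the threshold value are hypothetical names chosen for illustration, not part of any specific IoT platform.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Summary:
    """Compact record an edge device could send instead of raw readings."""
    count: int
    mean: float
    minimum: float
    maximum: float
    alerts: List[float]  # readings above the threshold, flagged locally


def summarize_window(readings: List[float], alert_threshold: float) -> Summary:
    """Reduce one window of raw readings to a single summary (hypothetical helper)."""
    if not readings:
        raise ValueError("readings must not be empty")
    return Summary(
        count=len(readings),
        mean=mean(readings),
        minimum=min(readings),
        maximum=max(readings),
        # Detecting these on the device avoids a round trip to the cloud.
        alerts=[r for r in readings if r > alert_threshold],
    )


# Example: five temperature readings, one of them out of range.
window = [21.0, 21.5, 22.0, 35.0, 21.5]
summary = summarize_window(window, alert_threshold=30.0)
print(summary)
```

In this sketch, five raw values collapse into one record, and the out-of-range reading is identified on the device itself. A real deployment would also need to decide how long a window is and how the summary is transmitted, which this example leaves out.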

AI and ML Integration

AR and VR Integration

Digital Twins

Innovative Silicon Chips in IoT

Voice-activated Assistants
