“AI is not just about making predictions; it’s about making predictions we can trust,” states Cynthia Rudin, Professor of Computer Science at Duke University. This statement underscores a crucial point: trust is becoming just as important as innovation in AI. Here’s where Explainable AI comes into the picture! And before you confuse it with Generative AI, let’s be clear: the two concepts are quite different.

Let’s break it down for better clarity:

As Artificial Intelligence unfolds, two prominent branches have captured attention for very different reasons: Explainable AI (XAI) and Generative AI (Gen AI). Generative AI wows us with its ability to create new content—whether that’s text, images, or even music—while Explainable AI grounds us with transparency, making sure humans understand the “why” behind AI’s decisions. Together, they illustrate the balance between creativity and clarity that defines the future of AI.

Let’s explore the concept of Explainable AI and how it is different from GenAI!

A Quick Comparison between GenAI and XAI

Generative AI (Gen AI)

What it is: A branch of AI focused on creating new content (text, images, code, audio, video, etc.) based on patterns learned from large datasets.

Examples: ChatGPT generating essays, Midjourney creating images, GitHub Copilot writing code.

Key characteristic: It produces novel outputs that did not exist before, often mimicking human creativity.

Use cases: Content creation, chatbots, image generation, product design, drug discovery, personalized experiences.

Explainable AI (XAI)

What it is: A collection of approaches and tools designed to make AI models’ decisions easier for humans to interpret and understand.

Goal: Help people understand why an AI system made a particular decision or prediction.

Examples: A medical AI explaining why it flagged a tumor as malignant, or a credit scoring AI showing which factors led to loan rejection.

Key characteristic: It focuses on transparency, trust, and accountability rather than generating content.

Use cases: Healthcare, finance, legal systems, any high-stakes decision-making where humans must trust AI.

Why is Explainable AI important? What roadblocks does it resolve?

Modern AI systems, especially those using deep learning, often act like “black boxes,” producing accurate predictions without showing how or why the model reached that result. This lack of interpretability creates challenges in industries like medicine, law, and finance, where decisions impact lives.

Use Case Examples

Healthcare – Doctors need to know why an AI system flagged a certain diagnosis. For instance, if an AI predicts that a tumor is malignant, XAI tools can highlight the features or data points (such as tumor size, shape, density, or texture patterns) that influenced this decision. This ensures that medical professionals can validate AI outputs before taking clinical actions, reducing the risk of misdiagnosis.

Finance – Loan approvals must be explainable to avoid claims of bias and to meet regulatory requirements. For example, if a loan application is rejected, XAI can reveal which risk factors (such as credit score, income stability, employment history, or debt-to-income ratio) led to that outcome. This level of transparency builds trust with customers and helps ensure fairness in lending practices.

Autonomous Vehicles – Self-driving cars rely on real-time AI decision-making for navigation, obstacle detection, and accident prevention. XAI helps engineers and regulators understand the decision logic behind actions like sudden braking, lane changes, or object avoidance, improving safety, accountability, and system reliability.

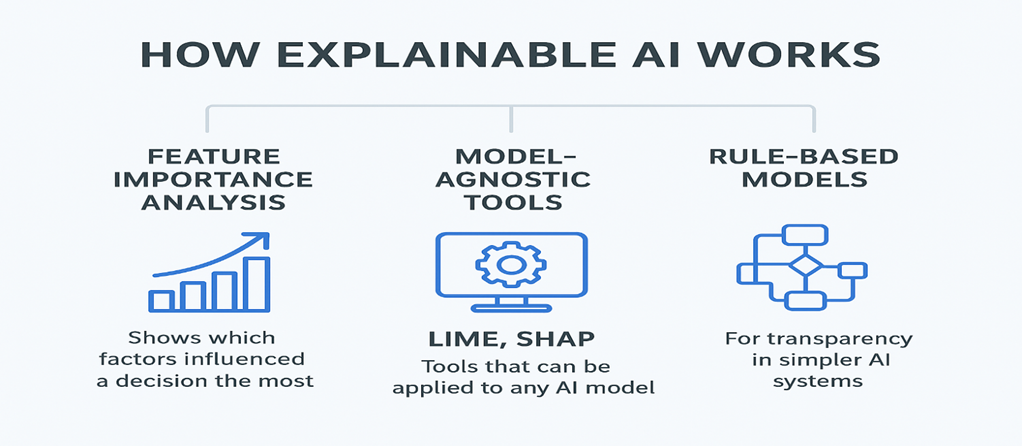

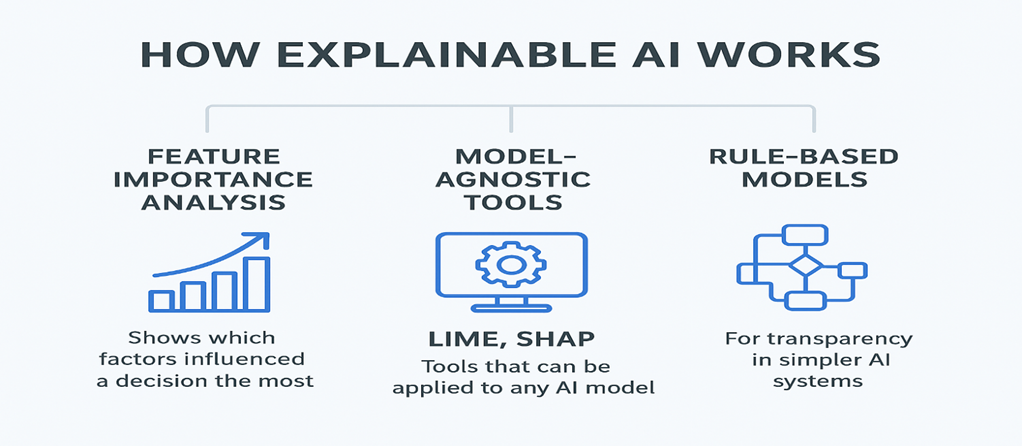

How XAI Works: Methods and Techniques

Explainable AI uses a range of techniques to make AI decision-making transparent, interpretable, and trustworthy. Some of the key methods include:

Feature Importance Analysis

This technique ranks the input features (variables) of a model based on how much they influence the output decision. For example, in a loan approval model, feature importance analysis might show that credit score contributes 40%, income stability 30%, and debt-to-income ratio 20% toward the decision, while other factors play a minor role. This helps stakeholders understand which inputs matter the most and allows them to detect potential bias in the model.
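One common way to compute such a ranking is permutation importance: shuffle one feature at a time and measure how much the model's error grows. The sketch below is a minimal, standard-library-only illustration. The `loan_score` function, its weights, and the synthetic applicants are all hypothetical stand-ins, not a real lending model.

```python
import random

def loan_score(row):
    """Hypothetical stand-in for a trained model; the weights are made up."""
    credit_score, income_stability, debt_to_income = row
    return 0.6 * credit_score + 0.3 * income_stability - 0.1 * debt_to_income

def mean_abs_error(model, rows, targets):
    return sum(abs(model(r) - t) for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(model, rows, targets, seed=0):
    """Return, per feature, how much the error grows when that column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, rows, targets)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_abs_error(model, shuffled, targets) - baseline)
    return importances

# Synthetic applicants: three features scaled to [0, 1] (illustrative data only).
data_rng = random.Random(42)
rows = [tuple(data_rng.random() for _ in range(3)) for _ in range(200)]
targets = [loan_score(r) for r in rows]  # the toy model fits this data exactly

importances = permutation_importance(loan_score, rows, targets)
total = sum(importances)
shares = [imp / total for imp in importances]  # normalized so they sum to 1
names = ["credit_score", "income_stability", "debt_to_income"]
for name, share in sorted(zip(names, shares), key=lambda pair: -pair[1]):
    print(f"{name}: {share:.0%}")
```

Normalizing the raw scores into shares gives the kind of percentage breakdown described above. In practice you would run this against a trained model and held-out data, and repeat the shuffle several times to smooth out randomness.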

Model-Agnostic Tools

These tools work across different AI models (black-box or white-box) without depending on their internal architecture, making them versatile and widely used. Two popular methods are:

LIME (Local Interpretable Model-Agnostic Explanations)

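LIME explains individual predictions by fitting a simpler, interpretable model (such as a linear regression) to the black-box model’s behavior around a specific data point. It does this by generating perturbed samples near that point, querying the original model on them, and weighting each sample by its proximity. For instance, if an AI predicts that a patient has diabetes, LIME generates a simplified explanation showing how glucose level, BMI, and age influenced this decision. Its focus is local interpretability: it explains why the decision was made for that specific case, not how the model behaves overall.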
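To make the idea concrete, here is a rough LIME-style sketch in plain Python. It is not the `lime` library, and it skips refinements such as feature selection. The `black_box` formula, the patient values, and the per-feature `scales` are assumptions chosen purely for illustration.

```python
import math
import random

def black_box(x):
    """Hypothetical diabetes-risk model (illustrative formula, not clinical)."""
    glucose, bmi, age = x
    z = 0.04 * (glucose - 120) + 0.08 * (bmi - 28) + 0.02 * (age - 50)
    return 1 / (1 + math.exp(-z))

def solve(a, b):
    """Solve the linear system a @ x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (m[i][n] - sum(m[i][j] * x[j] for j in range(i + 1, n))) / m[i][i]
    return x

def lime_like(model, point, scales, n_samples=500, seed=0):
    """Fit a proximity-weighted linear surrogate to `model` around `point`."""
    rng = random.Random(seed)
    k = len(point)
    xtx = [[0.0] * (k + 1) for _ in range(k + 1)]
    xty = [0.0] * (k + 1)
    for _ in range(n_samples):
        z = [rng.gauss(0, 1) for _ in range(k)]           # standardized offsets
        sample = [p + s * zi for p, s, zi in zip(point, scales, z)]
        weight = math.exp(-sum(zi * zi for zi in z) / 2)  # nearer samples count more
        row = [1.0] + z
        y = model(sample)
        for i in range(k + 1):
            xty[i] += weight * row[i] * y
            for j in range(k + 1):
                xtx[i][j] += weight * row[i] * row[j]
    coefs = solve(xtx, xty)  # weighted least squares via the normal equations
    return coefs[0], coefs[1:]

patient = [160.0, 33.0, 55.0]  # glucose, BMI, age (hypothetical patient)
scales = [10.0, 2.0, 5.0]      # assumed typical variation per feature
intercept, effects = lime_like(black_box, patient, scales)
for name, effect in zip(["glucose", "bmi", "age"], effects):
    print(f"{name}: {effect:+.4f} per scale unit")
```

Each coefficient estimates how much the predicted risk moves when that feature shifts by one scale unit near this patient. A different patient would generally get different coefficients, which is exactly what "local" means here.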

SHAP (SHapley Additive exPlanations)

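SHAP applies principles from cooperative game theory to determine how much each feature contributes to a prediction. It computes each feature’s Shapley value: the average change in the output when that feature is added, taken over all possible combinations of the other features. Each explanation is local (it covers one prediction), but the attributions are consistent and additive: they sum to the difference between the prediction and a baseline, and they can be aggregated across many predictions for a global view of the model’s behavior. For example, in a credit scoring model, SHAP might show that, for a given applicant, payment history raises the predicted likelihood of loan approval by 25 percentage points, while a high debt ratio lowers it by 15.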
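For a handful of features, Shapley values can be computed exactly by brute force. The sketch below does that for a hypothetical three-feature credit model. The model, its weights, and the choice to represent an "absent" feature by a fixed baseline value are simplifying assumptions. The `shap` library uses faster approximations and averages over background data instead.

```python
from itertools import combinations
from math import factorial

def shapley_values(value_fn, n_features):
    """Exact Shapley values: weighted average marginal contribution per feature."""
    phi = [0.0] * n_features
    for i in range(n_features):
        others = [j for j in range(n_features) if j != i]
        for size in range(len(others) + 1):
            # Probability that exactly this coalition precedes feature i
            weight = (factorial(size) * factorial(n_features - size - 1)
                      / factorial(n_features))
            for subset in combinations(others, size):
                coalition = frozenset(subset)
                phi[i] += weight * (value_fn(coalition | {i}) - value_fn(coalition))
    return phi

def credit_model(payment_history, debt_ratio, stable_income):
    """Hypothetical approval score; the weights are illustrative assumptions."""
    return 0.40 + 0.25 * payment_history - 0.15 * debt_ratio + 0.10 * stable_income

applicant = (1, 1, 1)  # good payment history, high debt ratio, stable income
baseline = (0, 0, 0)   # assumed reference applicant

def value_fn(coalition):
    """Model output when only features in `coalition` take the applicant's values."""
    inputs = [applicant[i] if i in coalition else baseline[i] for i in range(3)]
    return credit_model(*inputs)

phi = shapley_values(value_fn, 3)
for name, value in zip(["payment_history", "debt_ratio", "stable_income"], phi):
    print(f"{name}: {value:+.2f}")
```

The attributions add up to the gap between the applicant's score and the baseline score, which is the additivity property that gives SHAP its name. The brute-force loop visits every subset of features, so it is only practical for small feature counts.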

Rule-Based Models

Unlike complex black-box models, rule-based systems follow if-then logic or decision trees, making them inherently interpretable. For instance, a simple rule for a health risk model could be: If cholesterol > 250 and age > 50, then risk = high. While not as powerful as deep learning models, rule-based approaches are often used in regulatory environments where transparency is mandatory.
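The example rule above translates almost directly into code, which is why such models are easy to audit. In this small sketch, the function name and the "not high" label for every other case are assumptions, since the text only defines when risk is high.

```python
def health_risk(cholesterol, age):
    """Apply the example rule and report which conditions held."""
    checks = {
        "cholesterol > 250": cholesterol > 250,
        "age > 50": age > 50,
    }
    # The rule fires only when every condition is true.
    risk = "high" if all(checks.values()) else "not high"
    return risk, checks

risk, checks = health_risk(cholesterol=260, age=62)
print(risk, checks)
```

Returning the individual checks alongside the result means the explanation comes for free: anyone reviewing a decision can see exactly which conditions were met.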

Why Both GenAI and XAI Matter in the AI Landscape

Explainable AI builds trust and compliance in industries where accountability is crucial. For example, in healthcare, an AI system recommending a treatment plan must justify its choices to doctors and regulators. Generative AI boosts innovation and efficiency by simplifying tasks such as content creation, design, and prototyping, cutting down the time and manual effort required. Combined, they enable safe and innovative AI adoption—imagine a generative AI tool for medical research whose decisions are fully explainable using XAI principles.

Future Outlook: Balancing Creativity with Clarity

The next era of AI will focus on combining innovation with clear, explainable decision-making:

- Regulatory pressure will demand explainability in critical sectors.

- Hybrid models may integrate generative capabilities with explainability layers, ensuring users not only see creative outputs but also understand how and why they were generated.

- Ethical AI development will prioritize bias detection, fairness, and reliability, making both XAI and Gen AI vital for responsible AI deployment.

In a Nutshell

While Generative AI fuels innovation with its ability to create, Explainable AI ensures trust and accountability by making AI decisions transparent. Both play unique roles in shaping the AI-driven future, and their combined power will define how businesses, regulators, and individuals interact with artificial intelligence in the years ahead.

Share your thoughts in the comments: how do you see XAI and Gen AI shaping the future? Connect with us to learn how AI can transform your business!