If you’re evaluating AI agents for your business, the first question is rarely “Can this work?” The real question is: “Can this work without creating new risk?”

This is because nobody wants an automation success story that turns into an accountability mess. When a workflow breaks, a customer escalates, or a compliance flag appears, leaders don’t want to hear, “the model decided.” They want human control by design, with AI doing the heavy lifting and people keeping the steering wheel.

That’s exactly where AI agents are headed in 2025: reducing manual work dramatically while keeping humans firmly in charge.

Why “agentic” work is rising, but “hands-off” is still a myth

AI agents are different from chatbots. A chatbot answers. An agent can plan, decide, and execute steps across systems: pull data, draft outputs, route tasks, open tickets, reconcile records, generate reports, and trigger follow-ups.

But full autonomy isn’t what most businesses actually need. What they need is speed and consistency, without giving up supervision.

A useful reality check comes from Gartner’s customer service research: only 20% of customer service leaders report AI-driven headcount reduction. This suggests most organizations are using AI to augment work rather than to replace people outright.

That’s the point. AI agents reduce workload, improve throughput, and increase quality, but human oversight remains the operating model.

So, what does “human control” look like in an AI-agent workflow?

Not a vague promise. It’s a set of concrete system behaviors.

Human control means:

- The agent can act, but only within defined boundaries (permissions, budgets, policies).

- A human can approve, override, or roll back actions.

- Every action is logged with context (what it saw, what it decided, what it changed).

- Exceptions and high-risk decisions are automatically escalated.
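To make these four behaviors concrete, here is a minimal Python sketch, not a production design. It assumes a hypothetical `GuardedAgent` wrapper that every tool call passes through. An allowlist of actions and a spend budget act as the boundaries, every attempt lands in an audit log, out-of-bounds requests are escalated instead of executed, and a human can roll back the last action. The class, field names, and budget are illustrative. In a real system, rollback would also have to reverse side effects in the CRM, ERP, or helpdesk, which this sketch does not model.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass
class AuditEntry:
    # One record per attempted action: what the agent saw, decided, and changed.
    timestamp: str
    action: str
    inputs: dict[str, Any]
    decision: str
    changes: dict[str, Any]


@dataclass
class GuardedAgent:
    allowed_actions: set[str]  # permission boundary, like a user's role
    budget: float              # hypothetical spend limit for this run
    audit_log: list[AuditEntry] = field(default_factory=list)
    escalations: list[str] = field(default_factory=list)
    _done: list[tuple[str, float]] = field(default_factory=list, repr=False)

    def _log(self, action: str, inputs: dict[str, Any], decision: str,
             changes: dict[str, Any]) -> None:
        self.audit_log.append(AuditEntry(
            datetime.now(timezone.utc).isoformat(), action, inputs, decision, changes))

    def act(self, action: str, inputs: dict[str, Any], cost: float = 0.0) -> bool:
        """Execute only inside the boundaries; otherwise log and escalate."""
        if action not in self.allowed_actions:
            self._log(action, inputs, "blocked: not permitted", {})
            self.escalations.append(action)
            return False
        if cost > self.budget:
            self._log(action, inputs, "escalated: over budget", {})
            self.escalations.append(action)
            return False
        self.budget -= cost
        self._done.append((action, cost))
        self._log(action, inputs, "executed", {"cost": cost})
        return True

    def roll_back_last(self, reviewer: str) -> str | None:
        """Let a human undo the most recent executed action (budget only here)."""
        if not self._done:
            return None
        action, cost = self._done.pop()
        self.budget += cost
        self._log(action, {}, f"rolled back by {reviewer}", {"cost": -cost})
        return action
```

One design choice matters here: a blocked action still produces a log entry, so reviewers see what the agent tried to do, not only what it did.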

If you’re a founder, CTO, or operations leader, you should be asking:

At which specific points in a workflow is human judgment mandatory, and which steps can proceed by default?

That one question determines whether your AI agent becomes a productivity asset or a governance headache.

The practical model: AI does the work, humans make the call

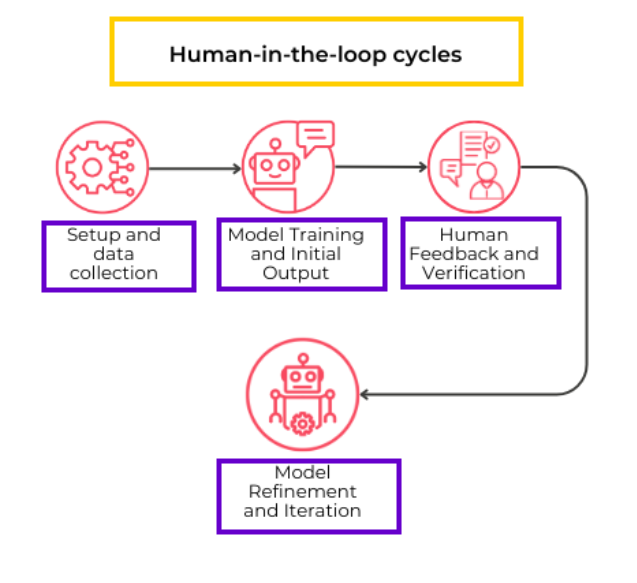

The winning patterns in 2025 are Human-in-the-Loop (HITL) and Human-on-the-Loop (HOTL).

Human-in-the-Loop: AI prepares, drafts, recommends, and routes; humans approve key steps.

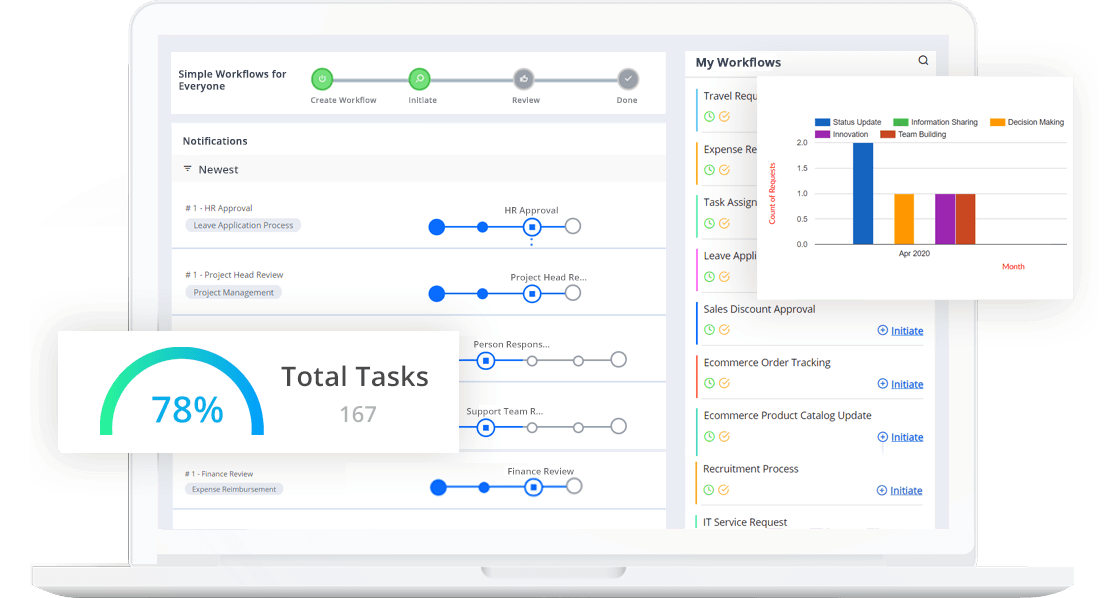

Human-on-the-Loop: AI executes routine steps autonomously; humans supervise via dashboards and intervene when thresholds are crossed.

This is where AI agents shine; they remove repetitive effort without removing responsibility.
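The difference between the two modes comes down to where a proposed step stops. The sketch below is a hypothetical illustration: `route_step`, the `is_key_step` flag, and the 0.7 alert threshold are assumptions, and `risk_score` stands in for whatever signal your system actually uses (for example, order value or a policy-violation score).

```python
from enum import Enum


class Oversight(Enum):
    HITL = "human-in-the-loop"  # AI prepares; humans approve key steps
    HOTL = "human-on-the-loop"  # AI executes; humans supervise and intervene


def route_step(mode: Oversight, is_key_step: bool, risk_score: float,
               alert_threshold: float = 0.7) -> str:
    """Decide what happens to one proposed step under the given oversight mode.

    risk_score and alert_threshold are hypothetical values in [0, 1].
    """
    if mode is Oversight.HITL:
        # Drafts and prep work flow through; key steps wait for a human.
        return "awaiting_approval" if is_key_step else "prepared_for_review"
    # HOTL: routine steps run on their own unless a threshold is crossed,
    # at which point the step pauses and surfaces on the supervisor's dashboard.
    if risk_score >= alert_threshold:
        return "paused_for_supervisor"
    return "executed"
```

In this sketch, HOTL ignores `is_key_step` on purpose: the supervisor's lever is the threshold, not a per-step approval.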

Where AI agents cut manual work the fastest

You don’t start by letting an agent “run the business.” You start where work is repetitive, rules exist, and exceptions can be escalated.

High-ROI examples (across industries) include:

Customer support operations: The agent summarizes tickets, suggests replies, retrieves policy references, drafts resolutions, and escalates edge cases.

Sales and CRM hygiene: The agent captures leads, enriches records, logs calls, drafts follow-ups, and routes opportunities while humans decide what to pursue.

Finance operations: The agent matches invoices, flags anomalies, prepares reconciliations, and drafts narratives for variances while finance approves.

HR workflows: The agent screens resumes against structured criteria, schedules interviews, and drafts candidate communications while recruiters make hiring decisions.

Procurement and vendor management: The agent compares quotes, drafts vendor emails, and prepares evaluation summaries while procurement signs off.

Notice the pattern: the agent handles volume and speed; humans own judgment and accountability.

The control mechanisms leaders should demand before going live

If you’re planning custom AI agent development, don’t approve a build that can’t answer these questions clearly:

1) What can the agent do, and what is it explicitly not allowed to do? Define permissions like you would for a human user. Least privilege wins.

2) Where are the approval gates? Decide which actions require confirmation: refunds, contract changes, regulatory decisions, customer account actions, pricing changes, and any “point of no return.”

3) How do we detect and handle exceptions? Agents perform best when the system treats exceptions as a first-class feature: auto-escalation, queueing, routing, and SLA timers.

4) Do we get a full audit trail? If you can’t replay what happened, you can’t manage risk, compliance, or disputes.

5) What’s the fallback when the agent is unsure? The agent should not guess its way through high-stakes steps. It should ask, escalate, or pause.

This is what “human control” actually means in production: not fewer humans, but better human leverage. Real-world implementations already show this balance at scale; our enterprise AI agent workflow example illustrates how manual effort can be reduced without losing human control.
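The approval-gate and fallback questions translate directly into a routing rule. This sketch assumes a hypothetical set of gated action types, a confidence threshold of 0.8, and a four-hour review SLA. None of these numbers is a recommendation, and an agent’s self-reported confidence is only useful if it has been checked against real outcomes.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from datetime import datetime, timedelta, timezone

# Hypothetical policy values; set these per workflow.
APPROVAL_GATES = {"refund", "contract_change", "pricing_change", "account_closure"}
MIN_CONFIDENCE = 0.8             # below this, the agent must not guess
REVIEW_SLA = timedelta(hours=4)  # time a human has to pick up an escalation


@dataclass
class Proposal:
    action: str
    payload: dict
    confidence: float  # agent's self-reported confidence, assumed calibrated


@dataclass
class ReviewQueue:
    # Each item: (proposal, reason for escalation, SLA deadline)
    items: list[tuple[Proposal, str, datetime]] = field(default_factory=list)

    def enqueue(self, proposal: Proposal, reason: str, now: datetime) -> None:
        self.items.append((proposal, reason, now + REVIEW_SLA))

    def overdue(self, now: datetime) -> list[Proposal]:
        """Queued items past their SLA deadline (resolution is not modeled here)."""
        return [p for p, _, due in self.items if now > due]


def decide(proposal: Proposal, queue: ReviewQueue, now: datetime) -> str:
    # 1) Approval gates come first: point-of-no-return actions always wait.
    if proposal.action in APPROVAL_GATES:
        queue.enqueue(proposal, "approval gate", now)
        return "needs_approval"
    # 2) Fallback when unsure: pause and escalate instead of guessing.
    if proposal.confidence < MIN_CONFIDENCE:
        queue.enqueue(proposal, "low confidence", now)
        return "escalated"
    # 3) Everything else proceeds by default (and should still be logged).
    return "auto_execute"
```

Gated actions are checked before confidence on purpose: a “point of no return” needs sign-off even when the agent is certain.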

The hidden reason AI agents fail: messy systems, not “bad AI”

Designing AI-powered workflow systems that scale safely requires more than models; it requires strong data engineering, secure integrations, and thoughtful system design.

Many agent projects stall because the organization is trying to automate chaos. The process isn’t stable, the data isn’t trustworthy, and the systems aren’t integrated.

Before building agents, teams need a clean foundation: well-defined workflows, reliable data sources, role-based access, secure integrations (CRM, ERP, helpdesk, LMS, etc.), and event tracking and logs.

AI agents are not just an AI problem. They are software architecture + workflow design + governance.

What to build in 2025 if you want agent ROI without risk

If you want real impact without overreach, build agents as part of an operational system, not as standalone demos.

The practical roadmap looks like this:

- Start with one workflow that is high-volume and measurable.

- Build the agent as “assist-first,” with approvals.

- Instrument everything: logs, metrics, failure reasons, and escalation paths.

- Expand autonomy only when performance is proven and risk is contained.

This is how organizations reduce manual work while keeping control; autonomy is earned, not assumed.
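“Earned autonomy” can be written down as an explicit promotion rule rather than left to a gut call. The sketch below assumes three hypothetical autonomy levels, a minimum of 500 human-reviewed outputs, and a 95% approved-without-edits rate before promotion; any incident that reaches a customer or a ledger moves the workflow back down one level. Every threshold here is an illustrative assumption to tune or replace for your own risk profile.

```python
from dataclasses import dataclass

# Hypothetical autonomy ladder, from most to least supervised.
LEVELS = ["assist", "approve_exceptions_only", "autonomous_with_monitoring"]


@dataclass
class WorkflowStats:
    reviewed: int            # agent outputs a human reviewed in this period
    approved_unchanged: int  # outputs approved with no edits
    incidents: int           # errors that reached a customer or a ledger


def next_autonomy_level(current: str, stats: WorkflowStats,
                        min_samples: int = 500, min_approval: float = 0.95) -> str:
    i = LEVELS.index(current)
    # Any incident costs one level of autonomy, regardless of approval rate.
    if stats.incidents > 0:
        return LEVELS[max(i - 1, 0)]
    # Not enough evidence yet: stay put.
    if stats.reviewed < max(min_samples, 1):
        return current
    # Promote one level only when humans rarely needed to change the output.
    if stats.approved_unchanged / stats.reviewed >= min_approval:
        return LEVELS[min(i + 1, len(LEVELS) - 1)]
    return current
```

Demotion is deliberately asymmetric: one incident steps autonomy down immediately, while promotion requires a sustained track record.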

Final thought

AI agents are not about removing humans from work. They’re about removing humans from repetitive work, so they can focus on decisions, relationships, creativity, and accountability.

If you’re planning an agent initiative in 2025, here’s the leadership question that matters most:

Which parts of the process benefit from speedy execution, and which demand deliberate human validation?

Answer that well, and you’ll build AI agents that increase productivity without creating a trust problem.